Swin transformers are robust to distribution and concept drift in endoscopy-based longitudinal rectal cancer assessment (Conference Paper)

| Authors: | Gomez, J. T.; Rangnekar, A.; Williams, H.; Thompson, H. M.; Garcia-Aguilar, J.; Smith, J. J.; Veeraraghavan, H. |

| Title: | Swin transformers are robust to distribution and concept drift in endoscopy-based longitudinal rectal cancer assessment |

| Conference Title: | Medical Imaging 2025: Image Processing |

| Abstract: | Endoscopic images are used at various stages of rectal cancer treatment: at cancer screening and diagnosis, during treatment to assess response and treatment-related toxicity such as colitis, and at follow-up to detect new tumor or local regrowth (LR). However, subjective assessment is highly variable and can underestimate the degree of response in some patients, subjecting them to unnecessary surgery, or overestimate response, placing patients at risk of disease spread. Advances in deep learning have shown the ability to produce consistent and objective response assessments from endoscopic images. However, methods for detecting cancers and regrowth and for monitoring response during the entire course of patient treatment and follow-up are lacking. This is because automated diagnosis and rectal cancer response assessment require methods that are robust to inherent illumination variations and confounding conditions (blood, scope, blurring) present in endoscopy images, as well as to changes in the normal lumen and tumor during treatment. Hence, a hierarchical shifted window (Swin) transformer was trained to distinguish rectal cancer from normal lumen using endoscopy images. Swin, two convolutional architectures (ResNet-50, WideResNet-50), and the vision transformer were trained and evaluated on follow-up longitudinal images to detect LR in in-distribution (ID) private datasets, as well as on out-of-distribution (OOD) public colonoscopy datasets to detect pre/non-cancerous polyps. Color shifts were applied using optimal transport to simulate distribution shifts. Swin and ResNet models were similarly accurate on the ID dataset. Swin was more accurate than the other methods (follow-up: 0.84, OOD: 0.83), even when subject to color shifts (follow-up: 0.83, OOD: 0.87), indicating the capability to provide robust performance for longitudinal cancer assessment. © 2025 SPIE. |

| Keywords: | chemotherapy; follow up; neurosurgery; cancer diagnosis; cancer screening; endoscopy; arthroplasty; diseases; cryosurgery; rectal cancer; robotic surgery; electrotherapeutics; transplantation (surgical); transplants; noninvasive medical procedures; longitudinal analysis; cardiovascular surgery; medicaments; concept drifts; endoscopy images; robustness to distribution; color shifts; endoscopic image; endoscopy image |

| Journal Title: | Progress in Biomedical Optics and Imaging - Proceedings of SPIE |

| Volume: | 13406 |

| Conference Dates: | 2025 Feb 17-20 |

| Conference Location: | San Diego, CA |

| ISSN: | 1605-7422 |

| Publisher: | SPIE |

| Date Published: | 2025-01-01 |

| Start Page: | 134061N |

| Language: | English |

| DOI: | 10.1117/12.3046794 |

| PROVIDER: | scopus |

| Notes: | Conference paper -- ISBN: 9781510685901 -- Source: Scopus |

Altmetric

Citation Impact

BMJ Impact Analytics

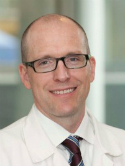

MSK Authors

- Garcia Aguilar (355)
- Veeraraghavan (163)
- Smith (231)
- Thompson (45)
- Williams (27)
- Rangnekar (12)
